如何用Python抓取雅虎财经数据

从雅虎财经抓取网络数据可能很困难,原因有三个:

- 它具有复杂的 HTML 结构

- 经常更新

- 它需要精确的 CSS 或 XPath 选择器

本教程将分步骤指导您如何使用Python解决这些问题。您会发现这个教程通俗易懂,最终您将得到一个完整的代码,能够从Yahoo Finance中提取您需要的财务数据。

雅虎财经 (Yahoo Finance) 允许抓取数据吗?

您通常能够抓取Yahoo Finance网站上的大部分公开数据。但若要进行合规的网络爬虫操作,请务必遵守其服务条款,同时注意不要使其服务器过载。

如何使用 Python 爬取雅虎财经数据

按照这个分步教程学习,您将掌握如何使用Python为Yahoo Finance开发网络爬虫工具。

1. 设置和先决条件

在开始使用 Python 抓取 Yahoo Finance 数据之前,请确保你的开发环境已准备就绪:

- 安装 Python :从Python 官方网站下载并安装最新版本的 Python 。

- 选择 IDE:使用 PyCharm、Visual Studio Code 或 Jupyter Notebook 等 IDE 进行开发工作。

- 基本知识:确保您了解CSS 选择器并能够熟练使用浏览器 DevTools 检查页面元素。

接下来,使用Poetry创建一个新项目:

poetry new yahoo-finance-scraper壳复制

该命令将生成以下项目结构:

yahoo-finance-scraper/

├── pyproject.toml

├── README.md

├── yahoo_finance_scraper/

│ └── __init__.py

└── tests/

└── __init__.pyMarkdown复制

导航到项目目录并安装 Playwright:

cd yahoo-finance-scraper

poetry add playwright

poetry run playwright install壳复制

Yahoo Finance 使用 JavaScript 动态加载内容。Playwright 可以呈现 JavaScript,因此适合从 Yahoo Finance 抓取动态内容。

打开该pyproject.toml文件来检查你的项目的依赖项,其中应该包括:

[tool.poetry.dependencies]

python = "^3.12"

playwright = "^1.46.0"托木斯克复制

最后,在文件夹中创建一个main.py文件yahoo_finance_scraper来编写您的抓取逻辑。

更新后的项目结构应如下所示:

yahoo-finance-scraper/

├── pyproject.toml

├── README.md

├── yahoo_finance_scraper/

│ ├── __init__.py

│ └── main.py

└── tests/

└── __init__.pyMarkdown复制

您的环境现已设置好,您可以开始编写 Python Playwright 代码来抓取雅虎财经了。

注意:如果您不想在本地机器上设置所有这些,您可以直接在

Apify上部署代码。在本教程的后面,我将向您展示如何在 Apify 上部署和运行您的抓取工具。

2. 连接到目标 Yahoo 财经页面

首先,让我们使用 Playwright 启动 Chromium 浏览器实例。虽然 Playwright 支持各种浏览器引擎,但在本教程中我们将使用 Chromium:

from playwright.async_api import async_playwright, Playwright

async def main():

async with async_playwright() as playwright:

browser = await playwright.chromium.launch(headless=False)# Launch a Chromium browser

context = await browser.new_context()

page = await context.new_page()if __name__ == "__main__":

asyncio.run(main())```

Python复制

要运行此脚本,您需要main()在脚本末尾使用事件循环执行该函数。

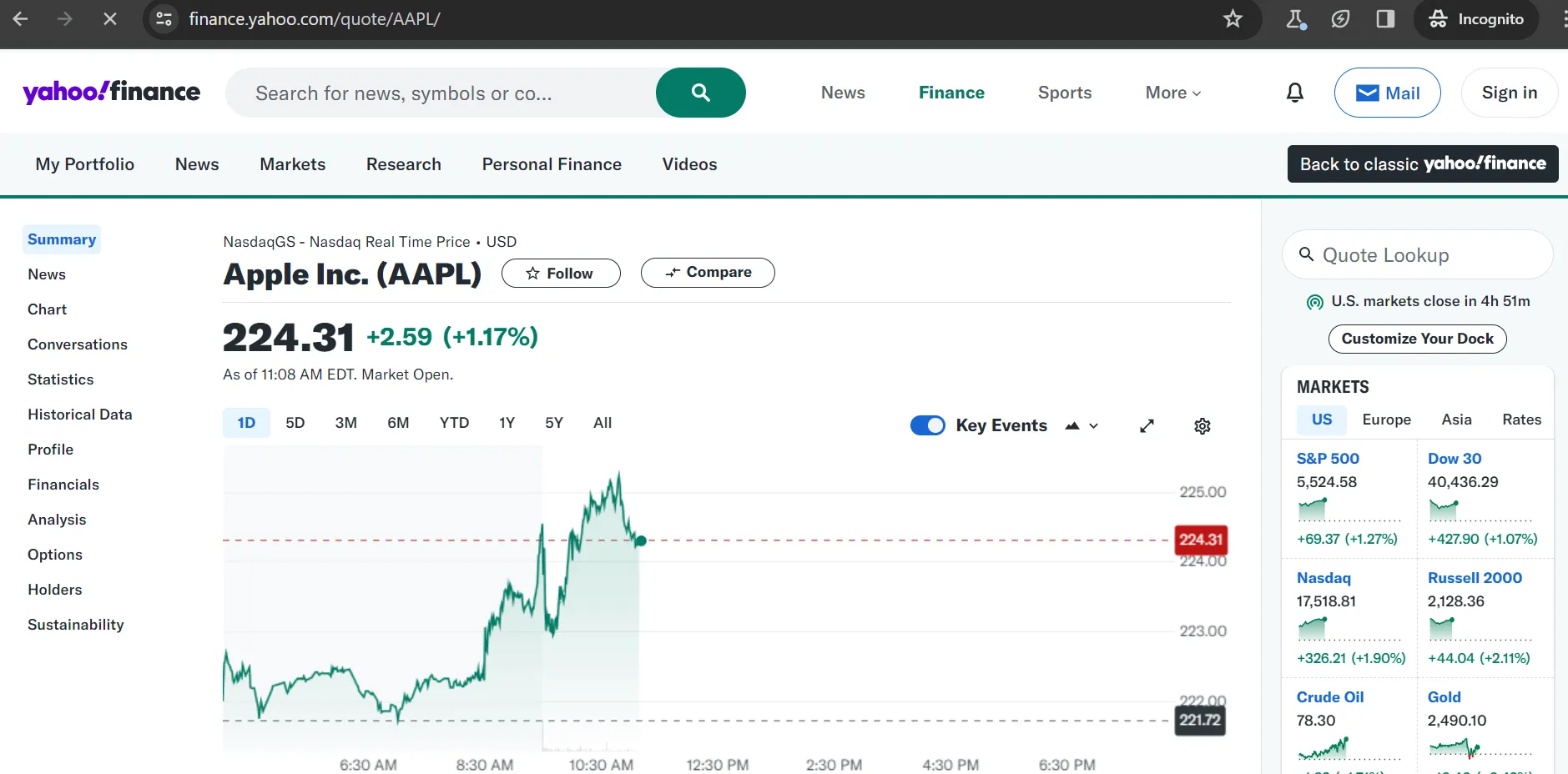

接下来,导航到要抓取的股票的 Yahoo Finance 页面。Yahoo Finance 股票页面的 URL 如下所示:

https://finance.yahoo.com/quote/{ticker_symbol}

壳复制

股票代码是用于识别证券交易所上市公司的唯一代码,例如AAPLApple Inc. 或TSLATesla, Inc.。股票代码发生变化时,URL 也会发生变化。因此,您应将其替换{ticker_symbol}为要抓取的特定股票代码。

async def main():

async with async_playwright() as playwright:

…

ticker_symbol = "AAPL"Replace with the desired ticker symbol

yahoo_finance_url = f"https://finance.yahoo.com/quote/{ticker_symbol}"

await page.goto(yahoo_finance_url)Navigate to the Yahoo Finance page

if name == "main":

asyncio.run(main())

Python复制

以下是迄今为止的完整脚本:async def main():

async with async_playwright() as playwright:

Launch a Chromium browser

browser = await playwright.chromium.launch(headless=False)

context = await browser.new_context()

page = await context.new_page()

ticker_symbol = "AAPL"Replace with the desired ticker symbol

yahoo_finance_url = f"https://finance.yahoo.com/quote/{ticker_symbol}"

await page.goto(yahoo_finance_url)Navigate to the Yahoo Finance page

Wait for a few seconds

await asyncio.sleep(3)Close the browser

await browser.close()if name == "main":

asyncio.run(main())

Python复制

当您运行此脚本时,它将打开 Yahoo Finance 页面几秒钟后才终止。

太棒了!现在,您只需更改股票代码即可抓取您选择的任何股票的数据。

__注意:__使用 UI ( headless=False) 启动浏览器非常适合测试和调试。如果您想节省资源并在后台运行浏览器,请切换到无头模式:

browser = await playwright.chromium.launch(headless=True)

Python复制

### 3. 绕过 cookies 模式

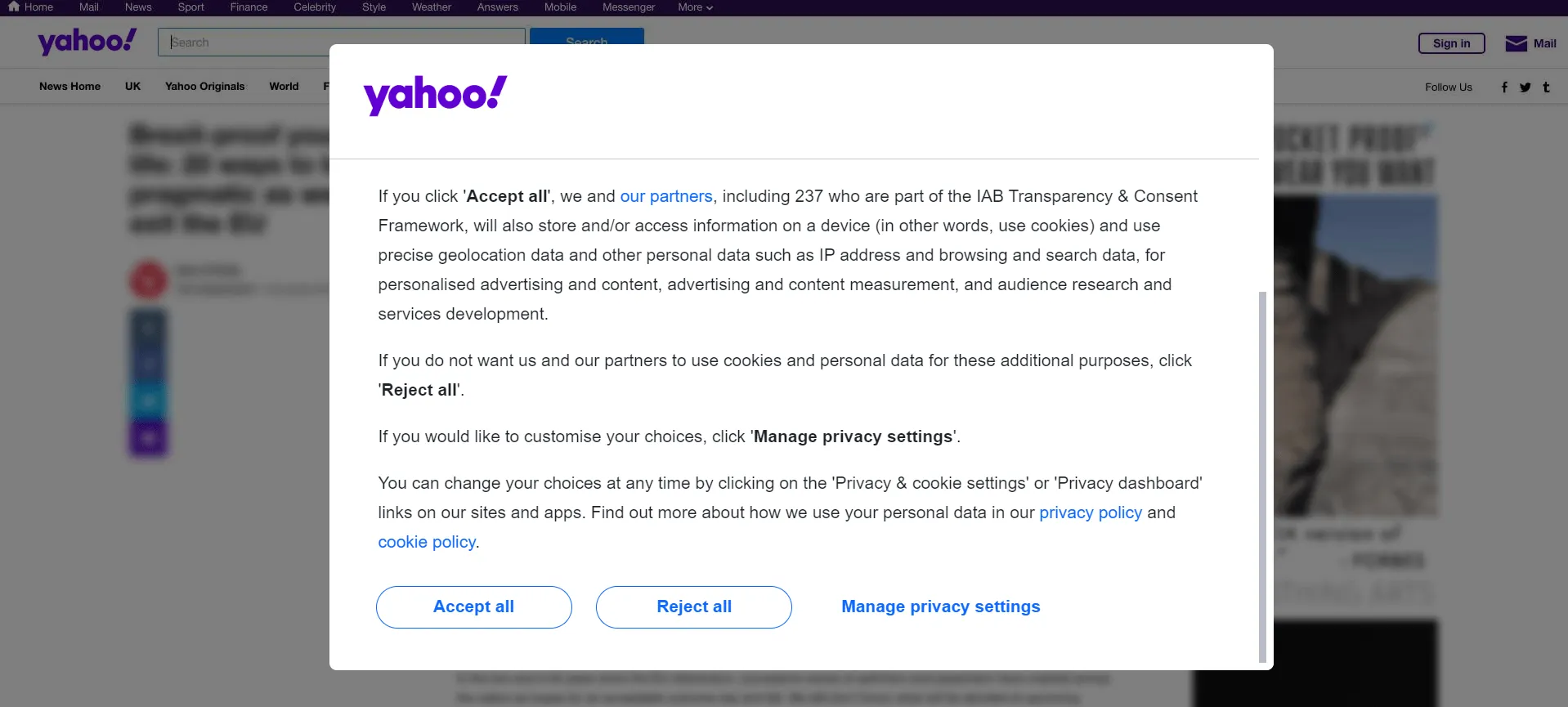

当从欧洲 IP 地址访问雅虎财经时,您可能会遇到一个需要先解决的 cookie 同意模式,然后才能继续抓取数据。

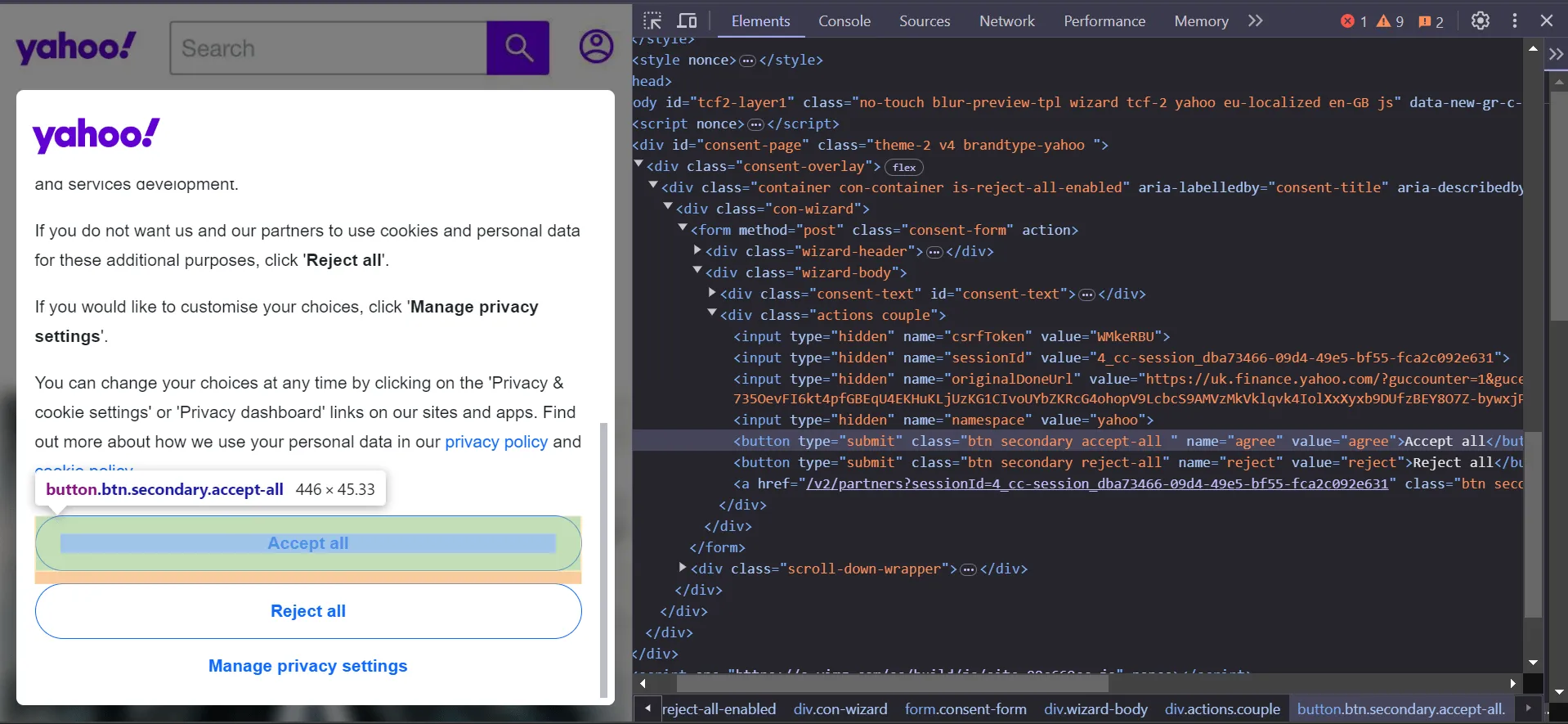

要继续访问所需页面,您需要通过点击“全部接受”或“全部拒绝”与模式进行交互。为此,请右键单击“全部接受”按钮并选择“检查”以打开浏览器的 DevTools:

在 DevTools 中,您可以看到可以使用以下 CSS 选择器选择该按钮:button.accept-all

CSS复制

要在 Playwright 中自动单击此按钮,您可以使用以下脚本:async def main():

async with async_playwright() as playwright:

browser = await playwright.chromium.launch(headless=False)

context = await browser.new_context()

page = await context.new_page()

ticker_symbol = "AAPL"

url = f"https://finance.yahoo.com/quote/{ticker_symbol}"

await page.goto(url)

try:Click the "Accept All" button to bypass the modal

await page.locator("button.accept-all").click()

except:

pass

await browser.close()Run the main function

if name == "main":

asyncio.run(main())

Python复制

如果出现 Cookie 同意模式,此脚本将尝试单击“全部接受”按钮。这样您就可以继续抓取而不会中断。

### 4. 检查页面以选择要抓取的元素

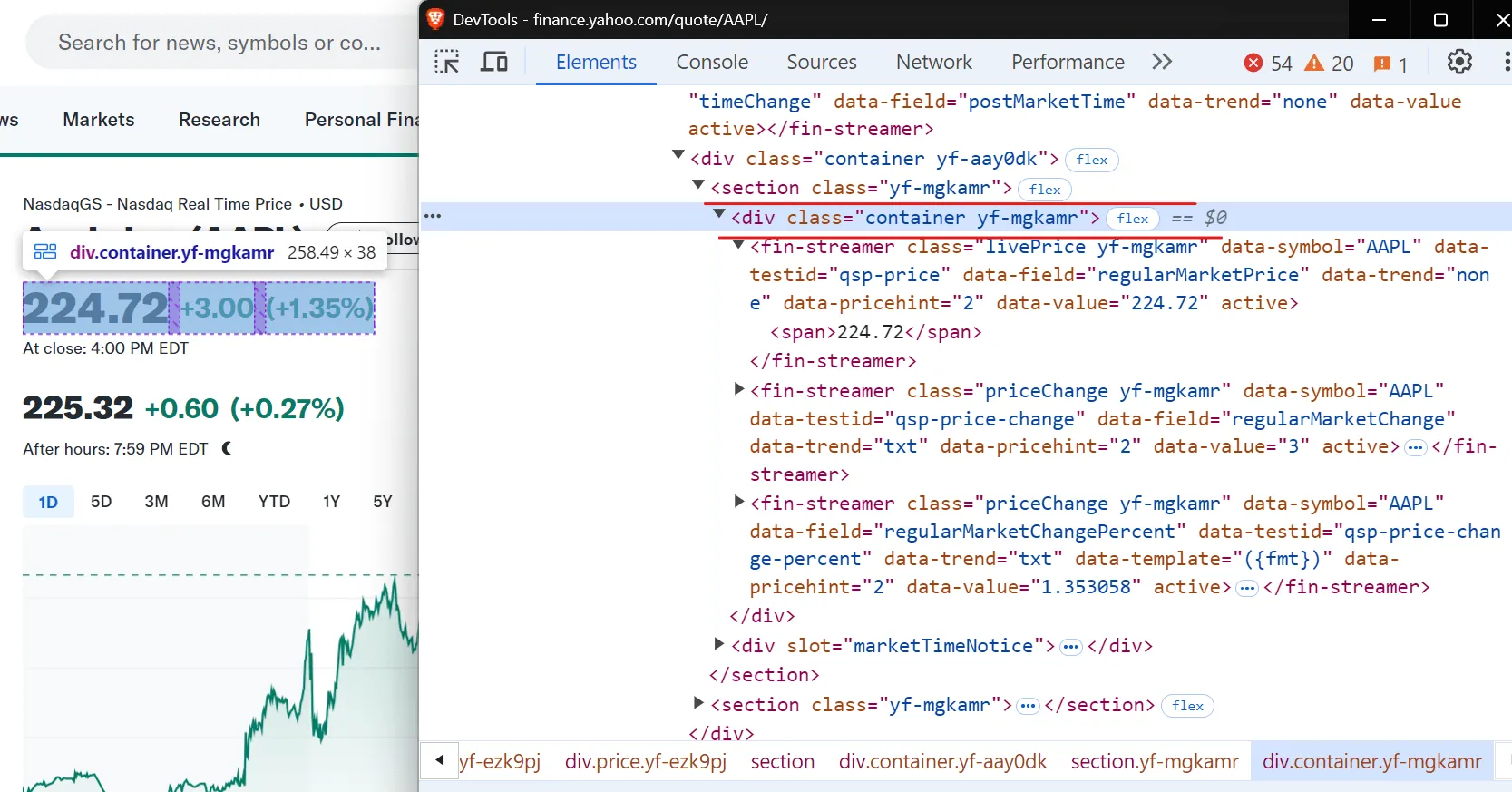

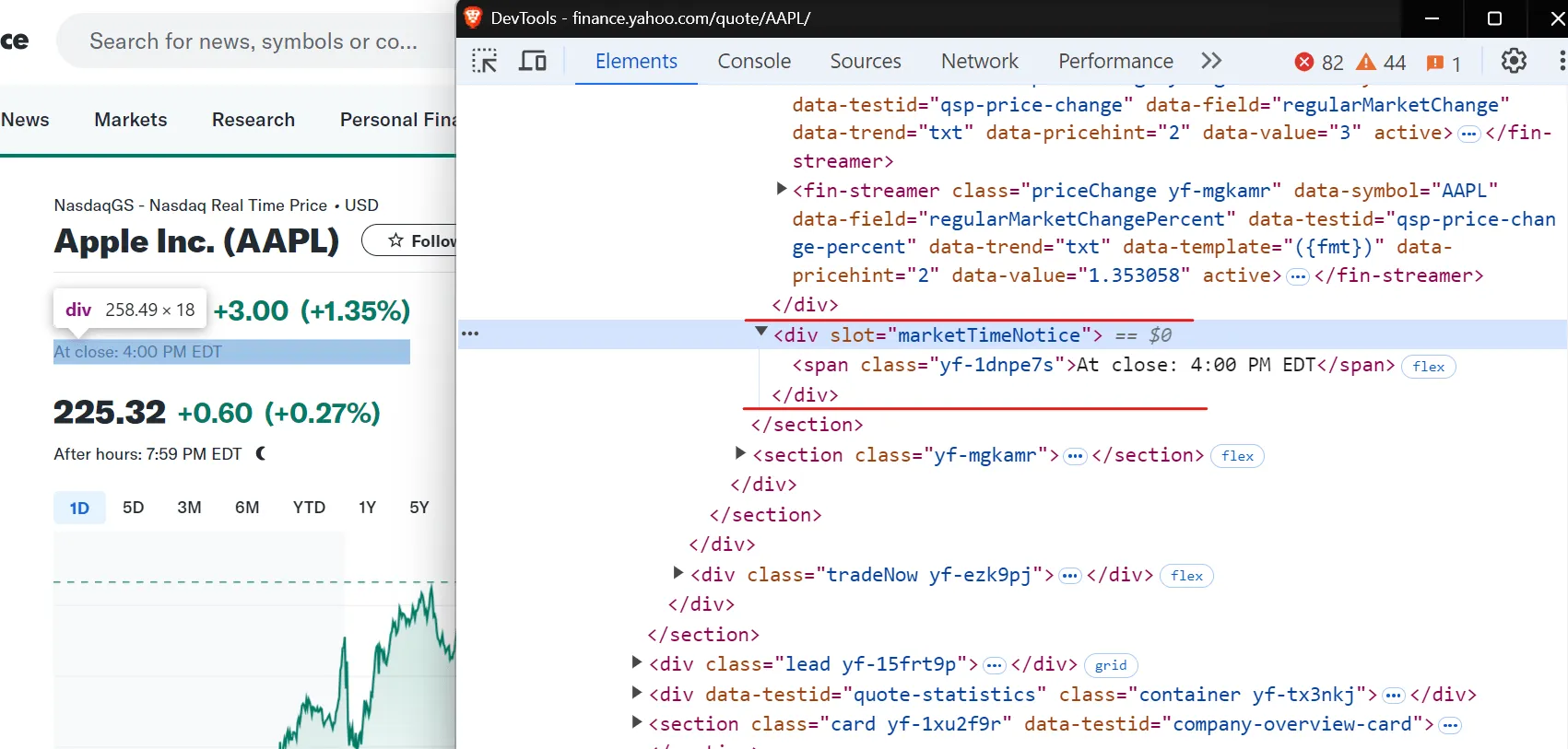

要有效地抓取数据,首先需要了解网页的 DOM 结构。假设您要提取常规市场价格 (224.72)、变化 (+3.00) 和变化百分比 (+1.35%)。这些值都包含在一个div元素中。在这个元素中div,您会发现三个fin-streamer元素,每个元素分别代表市场价格、变化和百分比。

为了精确定位这些元素,您可以使用以下 CSS 选择器:

[data-testid="qsp-price"]

[data-testid="qsp-price-change"]

[data-testid="qsp-price-change-percent"]

纯文本复制

太棒了!接下来我们来看看如何提取收盘时间,页面上显示为“4 PM EDT”。

要选择收盘时间,请使用以下 CSS 选择器:div[slot="marketTimeNotice"] > span

纯文本复制

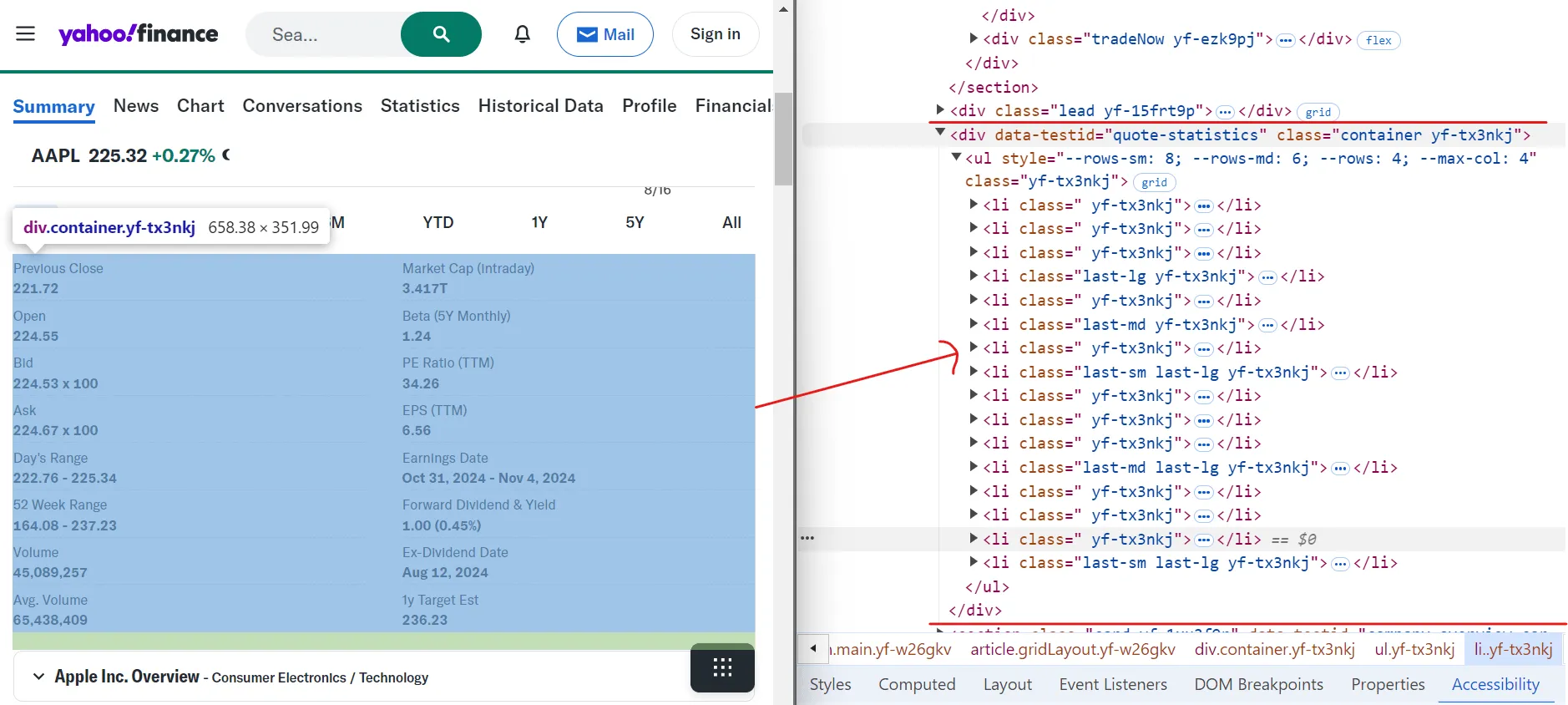

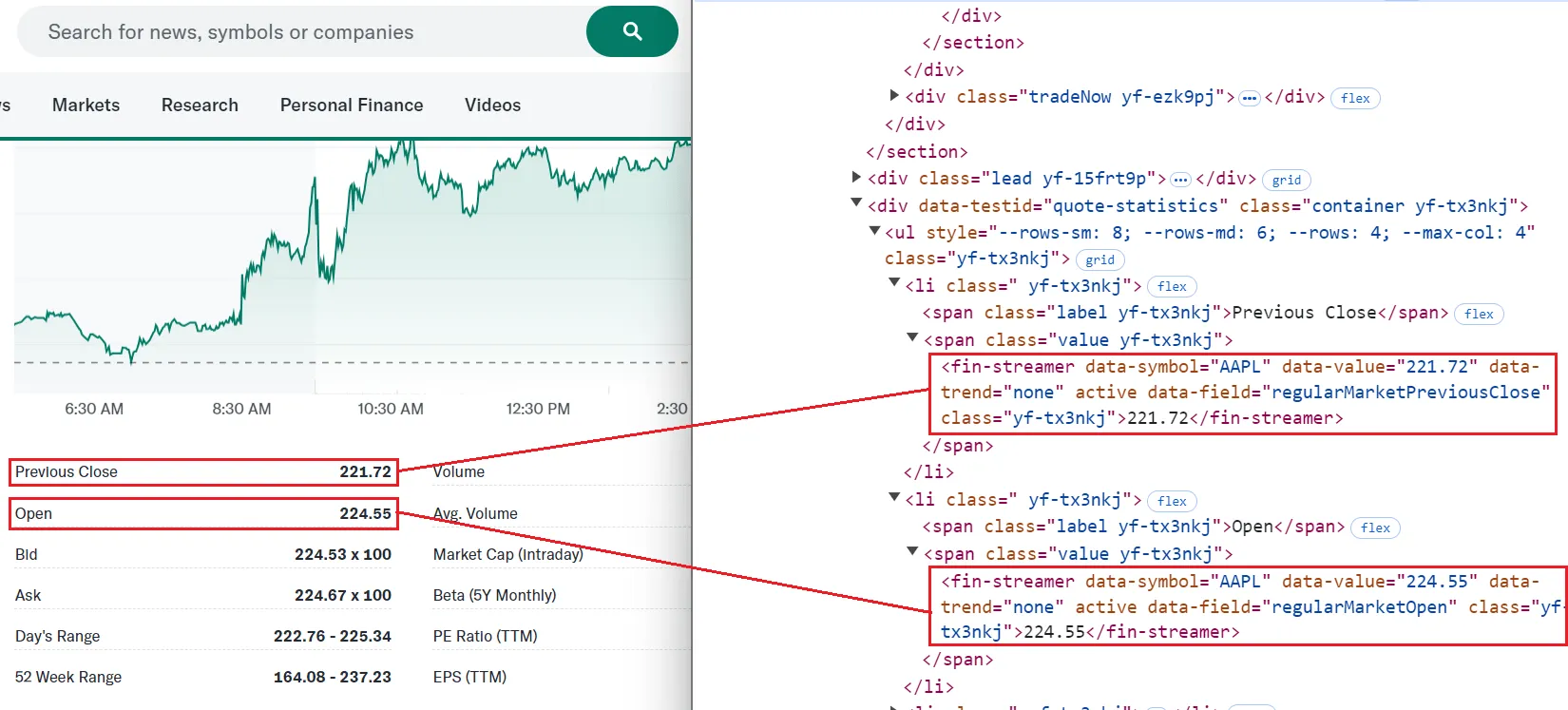

现在,让我们继续从表中提取关键的公司数据,如市值、前收盘价和交易量:

如您所见,数据结构为一个表格,其中有多个li标签代表每个字段,从“上次收盘价”开始到“1y Target Est”结束。

要提取特定字段(如“上次收盘价”和“开盘价”),可以使用data-field唯一标识每个元素的属性:

[data-field="regularMarketPreviousClose"]

[data-field="regularMarketOpen"]

纯文本复制

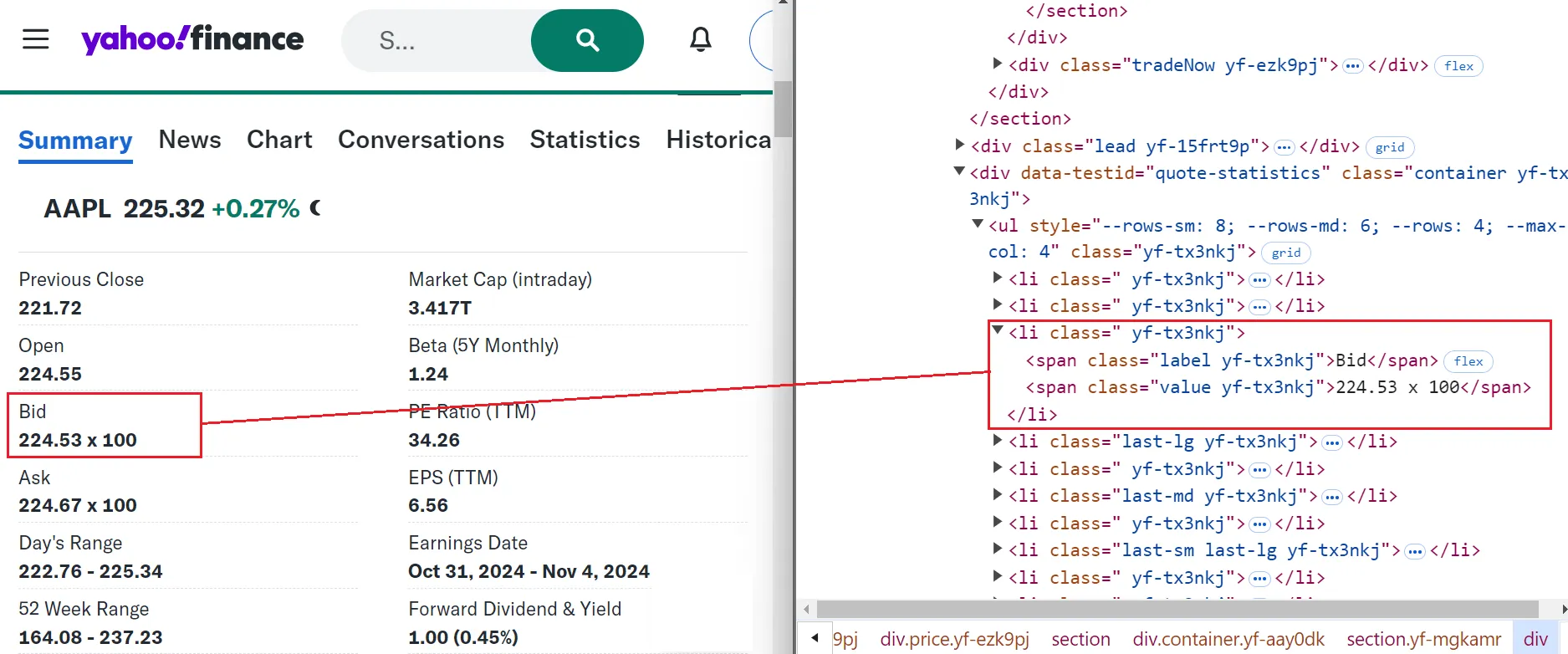

属性data-field提供了一种选择元素的简单方法。但是,在某些情况下,可能不存在这样的属性。例如,提取“Bid”值,该值缺少data-field属性或任何唯一标识符。在这种情况下,我们将首先使用其文本内容找到“Bid”标签,然后移至下一个同级元素以提取相应的值。

以下是您可以使用的组合选择器:

span:has-text(‘Bid’) + span.value

纯文本复制

### 5. 抓取股票数据

现在您已经确定了需要抓取的元素,接下来可以编写Playwright脚本来从Yahoo Finance提取数据了。

让我们定义一个名为 的新函数scrape_data来处理抓取过程。此函数接受股票代码,导航到 Yahoo Finance 页面,并返回包含提取的财务数据的字典。

工作原理如下:

async def scrape_data(playwright: Playwright, ticker: str) -> dict:

try:

Launch the browser in headless mode

browser = await playwright.chromium.launch(headless=True)

context = await browser.new_context()

page = await context.new_page()

url = f"https://finance.yahoo.com/quote/{ticker}"

await page.goto(url, wait_until="domcontentloaded")

try:Click the "Accept All" button if present

await page.locator("button.accept-all").click()

except:

passIf the button is not found, continue without any action

data = {"Ticker": ticker}Extract regular market values

data["Regular Market Price"] = await page.locator(

'[data-testid="qsp-price"]'

).text_content()

data["Regular Market Price Change"] = await page.locator(

'[data-testid="qsp-price-change"]'

).text_content()

data["Regular Market Price Change Percent"] = await page.locator(

'[data-testid="qsp-price-change-percent"]'

).text_content()Extract market close time

market_close_time = await page.locator(

'div[slot="marketTimeNotice"] > span'

).first.text_content()

data["Market Close Time"] = market_close_time.replace("At close: ", "")Extract other financial metrics

data["Previous Close"] = await page.locator(

'[data-field="regularMarketPreviousClose"]'

).text_content()

data["Open Price"] = await page.locator(

'[data-field="regularMarketOpen"]'

).text_content()

data["Bid"] = await page.locator(

"span:has-text('Bid') + span.value"

).text_content()

data["Ask"] = await page.locator(

"span:has-text('Ask') + span.value"

).text_content()

data["Day's Range"] = await page.locator(

'[data-field="regularMarketDayRange"]'

).text_content()

data["52 Week Range"] = await page.locator(

'[data-field="fiftyTwoWeekRange"]'

).text_content()

data["Volume"] = await page.locator(

'[data-field="regularMarketVolume"]'

).text_content()

data["Avg. Volume"] = await page.locator(

'[data-field="averageVolume"]'

).text_content()

data["Market Cap"] = await page.locator(

'[data-field="marketCap"]'

).text_content()

data["Beta"] = await page.locator(

"span:has-text('Beta (5Y Monthly)') + span.value"

).text_content()

data["PE Ratio"] = await page.locator(

"span:has-text('PE Ratio (TTM)') + span.value"

).text_content()

data["EPS"] = await page.locator(

"span:has-text('EPS (TTM)') + span.value"

).text_content()

data["Earnings Date"] = await page.locator(

"span:has-text('Earnings Date') + span.value"

).text_content()

data["Dividend & Yield"] = await page.locator(

"span:has-text('Forward Dividend & Yield') + span.value"

).text_content()

data["Ex-Dividend Date"] = await page.locator(

"span:has-text('Ex-Dividend Date') + span.value"

).text_content()

data["1y Target Est"] = await page.locator(

'[data-field="targetMeanPrice"]'

).text_content()

return data

except Exception as e:

print(f"An error occurred while processing {ticker}: {e}")

return {"Ticker": ticker, "Error": str(e)}

finally:

await context.close()

await browser.close()Python复制

代码通过已识别的CSS选择器来提取数据,使用locator方法定位每个元素,并应用text_content()方法从这些元素中抓取文本。抓取到的指标会存储在一个字典里,字典的每个键对应一个财务指标,而相应的值就是抓取到的文本内容。

最后,定义一个main函数,通过迭代每个代码并收集数据来协调整个过程

async def main():

Define the ticker symbol

ticker = "AAPL" async with async_playwright() as playwright:# Collect data for the ticker

data = await scrape_data(playwright, ticker) print(data)# Run the main functionif name == "main":

asyncio.run(main())“`

Python复制

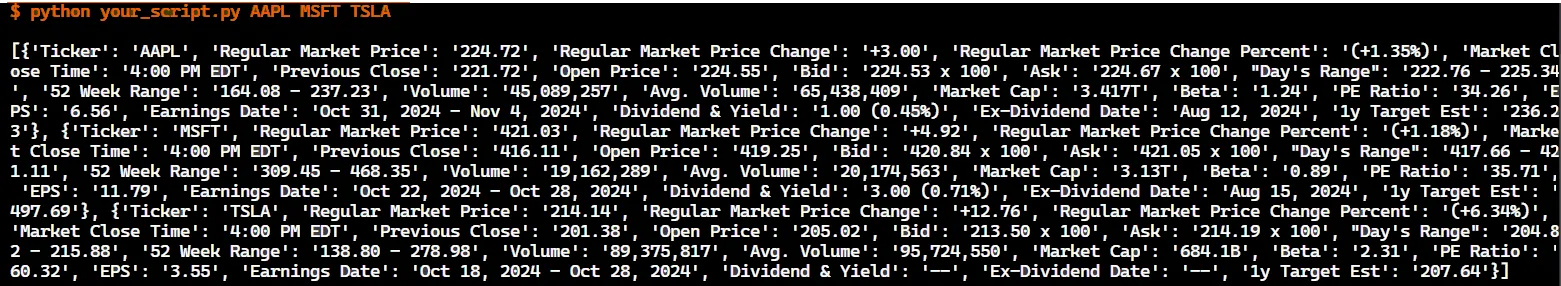

在抓取过程结束时,控制台中会打印以下数据:

6. 抓取历史股票数据

在获取了实时数据之后,我们再来看看雅虎财经提供的历史股票信息。这些数据反映了股票过往的表现,对做出投资决策很有帮助。您可以查询不同时间段的数据,包括日、周、月度数据,比如上个月、去年,甚至是股票的完整历史记录。

要访问 Yahoo Finance 上的历史股票数据,您需要通过修改特定参数来自定义 URL:

frequency:指定数据间隔,例如每日(1d)、每周(1wk)或每月(1mo)。period1和period2:这些参数以 Unix 时间戳格式设置数据的开始和结束日期。

比如,下面这个网址可以查询亚马逊(AMZN)从2023年8月16日到2024年8月16日的每周历史数据:

https://finance.yahoo.com/quote/AMZN/history/?frequency=1wk&period1=1692172771&period2=1723766400纯文本复制

导航到此 URL 后,您将看到一个包含历史数据的表格。在我们的例子中,显示的数据是过去一年的,间隔为一周。

要提取这些数据,您可以使用query_selector_allPlaywright 和 CSS 选择器中的方法.table tbody tr:

rows = await page.query_selector_all(".table tbody tr")Python复制

每行包含多个单元格(标签)来保存数据。以下是从每个单元格中提取文本内容的方法:

for row in rows:

cells = await row.query_selector_all("td")

date = await cells[0].text_content()

open_price = await cells[1].text_content()

high_price = await cells[2].text_content()

low_price = await cells[3].text_content()

close_price = await cells[4].text_content()

adj_close = await cells[5].text_content()

volume = await cells[6].text_content()Python复制

接下来,创建一个函数来生成 Unix 时间戳,我们将使用它来定义数据的开始( period1)和结束( )日期:period2

def get_unix_timestamp(

years_back: int = 0,

months_back: int = 0,

days_back: int = 0

) -> int:

"""Get a Unix timestamp for a specified number of years, months, or days back from today."""

current_time = time.time()

seconds_in_day = 86400

return int(

current_time

- (years_back * 365 + months_back * 30 + days_back) * seconds_in_day

)Python复制

现在,让我们编写一个函数来抓取历史数据:

async def scrape_historical_data(

playwright: Playwright,

ticker: str,

frequency: str,

period1: int,

period2: int

):

url = f"https://finance.yahoo.com/quote/{ticker}/history?frequency={frequency}&period1={period1}&period2={period2}"

browser = await playwright.chromium.launch(headless=True)

context = await browser.new_context()

page = await context.new_page()

await page.goto(url, wait_until="domcontentloaded")

try:

await page.locator("button.accept-all").click()

except:

pass

# Wait for the table to load

await page.wait_for_selector(".table-container")

# Extract table rows

rows = await page.query_selector_all(".table tbody tr")

# Prepare data storage

data = []

for row in rows:

cells = await row.query_selector_all("td")

date = await cells[0].text_content()

open_price = await cells[1].text_content()

high_price = await cells[2].text_content()

low_price = await cells[3].text_content()

close_price = await cells[4].text_content()

adj_close = await cells[5].text_content()

volume = await cells[6].text_content()

# Add row data to list

data.append(

[date, open_price, high_price, low_price, close_price, adj_close, volume]

)

print(data)

await context.close()

await browser.close()

return dataPython复制

该scrape_historical_data函数使用给定的参数构造 Yahoo Finance URL,在管理任何 cookie 提示的同时导航到该页面,等待历史数据表完全加载,然后提取并将相关数据打印到控制台。

最后,我们来看看如何用不同的设置来运行这个脚本:

async def main():

async with async_playwright() as playwright:

ticker = "TSLA"

# Weekly data for last year

period1 = get_unix_timestamp(years_back=1)

period2 = get_unix_timestamp()

weekly_data = await scrape_historical_data(

playwright, ticker, "1wk", period1, period2

)# Run the main function

if __name__ == "__main__":

asyncio.run(main())```

Python复制

通过调整参数来定制数据周期和频率:Daily data for the last month

period1 = get_unix_timestamp(months_back=1)

period2 = get_unix_timestamp()

await scrape_historical_data(playwright, ticker, "1d", period1, period2)

Monthly data for the stock’s lifetime

period1 = 1

period2 = 999999999999

await scrape_historical_data(playwright, ticker, "1mo", period1, period2)“`

Python复制

以下是我们到目前为止编写的,用于从雅虎财经(Yahoo Finance)抓取历史数据的完整脚本:

def get_unix_timestamp(

years_back: int = 0, months_back: int = 0, days_back: int = 0

) -> int:

"""Get a Unix timestamp for a specified number of years, months, or days back from today."""

current_time = time.time()

seconds_in_day = 86400

return int(

current_time

- (years_back * 365 + months_back * 30 + days_back) * seconds_in_day

)

async def scrape_historical_data(

playwright: Playwright, ticker: str, frequency: str, period1: int, period2: int

):

url = f"https://finance.yahoo.com/quote/{ticker}/history?frequency={frequency}&period1={period1}&period2={period2}"

browser = await playwright.chromium.launch(headless=True)

context = await browser.new_context()

page = await context.new_page()

await page.goto(url, wait_until="domcontentloaded")

try:

await page.locator("button.accept-all").click()

except:

pass

# Wait for the table to load

await page.wait_for_selector(".table-container")

# Extract table rows

rows = await page.query_selector_all(".table tbody tr")

# Prepare data storage

data = []

for row in rows:

cells = await row.query_selector_all("td")

date = await cells[0].text_content()

open_price = await cells[1].text_content()

high_price = await cells[2].text_content()

low_price = await cells[3].text_content()

close_price = await cells[4].text_content()

adj_close = await cells[5].text_content()

volume = await cells[6].text_content()

# Add row data to list

data.append(

[date, open_price, high_price, low_price, close_price, adj_close, volume]

)

print(data)

await context.close()

await browser.close()

return data

async def main() -> None:

async with async_playwright() as playwright:

ticker = "TSLA"

# Weekly data for the last year

period1 = get_unix_timestamp(years_back=1)

period2 = get_unix_timestamp()

weekly_data = await scrape_historical_data(

playwright, ticker, "1wk", period1, period2

)

if __name__ == "__main__":

asyncio.run(main())Python复制

运行此脚本根据您指定的参数将所有历史股票数据打印到控制台。

7. 抓取多只股票

到目前为止,我们已经抓取了一只股票的数据。为了同时收集多只股票的数据,我们可以修改脚本以接受股票代码作为命令行参数并处理每只股票。

async def main() -> None:

if len(sys.argv) < 2:

print("Please provide at least one ticker symbol as a command-line argument.")

return

tickers = sys.argv[1:] async with async_playwright() as playwright:# Collect data for all tickers

all_data = []

for ticker in tickers:

data = await scrape_data(playwright, ticker)

all_data.append(data)

print(all_data)# Run the main function

if __name__ == "__main__":

asyncio.run(main())```

Python复制

要运行脚本,请将股票代码作为参数传递:python yahoo_finance_scraper/main.py AAPL MSFT TSLA

壳复制

这将抓取并显示苹果公司 (AAPL)、微软公司 (MSFT) 和特斯拉公司 (TSLA) 的数据。

### 8. 避免被阻止

网站通常会发现并阻止自动抓取。它们使用速率限制、IP 阻止和检查浏览模式。以下是一些在网页抓取时不被发现的有效方法:

__1. 请求之间的随机间隔__

在请求之间添加随机延迟是一种避免检测的简单方法。这种基本方法可以使您的抓取行为对网站来说不那么明显。

以下是如何在 Playwright 脚本中添加随机延迟的方法:async def scrape_data(playwright: Playwright, ticker: str):

browser = await playwright.chromium.launch()

context = await browser.new_context()

page = await context.new_page()

url = f"https://example.com/{ticker}"Example URL

await page.goto(url)Random delay to mimic human-like behavior

await asyncio.sleep(random.uniform(2, 5))Your scraping logic here…

await context.close()

await browser.close()async def main():

async with async_playwright() as playwright:

await scrape_data(playwright, "AAPL")

Example ticker

if name == "main":

asyncio.run(main())

Python复制

该脚本在请求之间引入了 2 到 5 秒的随机延迟,使得操作变得不那么可预测,并降低了被标记为机器人的可能性。

__2. 设置和切换 User-Agent__

网站通常会通过User-[Agent](https://www.explinks.com/wiki/what-is-an-ai-agent/)字符串来识别发出请求的浏览器和设备。通过更换User-Agent字符串,可以让你的爬虫请求看起来像是来自不同的浏览器和设备,这有助于避免被网站检测到。

以下是在 Playwright 中实现 User-Agent 轮换的方法:async def scrape_data(playwright: Playwright, ticker: str) -> None:

browser = await playwright.chromium.launch(headless=True)

context = await browser.new_context()

List of user-agents

user_agents = [

"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36",

"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36",

"Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:91.0) Gecko/20100101 Firefox/91.0",

]Select a random user-agent from the list to rotate between requests

user_agent = random.choice(user_agents)Set the chosen user-agent for the current browser context

context.set_user_agent(user_agent)

page = await context.new_page()

url = f"https://example.com/{ticker}"Example URL with ticker

await page.goto(url)Your scraping logic goes here…

await context.close()

await browser.close()async def main():

async with async_playwright() as playwright:

await scrape_data(playwright, "AAPL")

Example ticker

if name == "main":

asyncio.run(main())

Python复制

此方法使用 User-Agent 字符串列表,并为每个请求随机选择一个。此技术有助于掩盖您的抓取工具的身份并降低被阻止的可能性。

> __注意:__您可以参考

>

> useragentstring.com等网站来获取完整的 User-Agent 字符串列表。

__3. 使用 Playwright-Stealth__

为了降低被检测的风险并提升您的爬虫效果,您可以使用[playwright-stealth](https://github.com/AtuboDad/playwright_stealth)库。这个库运用多种技术手段,让您的爬取行为更接近真实用户的浏览活动。

首先,安装playwright-stealth:

poetry add playwright-stealth

然后,修改脚本:async def scrape_data(playwright: Playwright, ticker: str) -> None:

browser = await playwright.chromium.launch(headless=True)

context = await browser.new_context()

Apply stealth techniques to avoid detection

await stealth_async(context)

page = await context.new_page()

url = f"https://finance.yahoo.com/quote/{ticker}"

await page.goto(url)Your scraping logic here…

await context.close()

await browser.close()async def main():

async with async_playwright() as playwright:

await scrape_data(playwright, "AAPL")

Example ticker

if name == "main":

asyncio.run(main())

Python复制

这些技术可以帮助避免被阻止,但您可能仍会遇到问题。如果是这样,请尝试更高级的方法,例如使用代理、轮换 IP 地址或实施 CAPTCHA 求解器。您可以查看详细指南21 条提示,让您在不被阻止的情况下抓取网站__。__这是您明智地选择代理、对抗 Cloudflare、解决 CAPTCHA、避免诱捕等的必备指南。

#### 又被屏蔽了?Apify Proxy 能帮你解决

通过智能轮换数据中心和住宅 IP 地址来提高抓取工具的性能。

### 9. 将抓取的股票数据导出为 CSV

抓取到所需的股票数据后,下一步就是将其导出为 CSV 文件,以便于分析、与他人共享或导入到其他数据处理工具中。

将提取的数据保存到 CSV 文件的方法如下:import csv

async def main() -> None:

…

async with async_playwright() as playwright:Collect data for all tickers

all_data = []

for ticker in tickers:

data = await scrape_data(playwright, ticker)

all_data.append(data)Define the CSV file name

csv_file = "stock_data.csv"Write the data to a CSV file

with open(csv_file, mode="w", newline="", encoding="utf-8") as file:

writer = csv.DictWriter(file, fieldnames=all_data[0].keys())

writer.writeheader()

writer.writerows(all_data)if name == "main":

asyncio.run(main())

Python复制

代码首先收集每个股票代码的数据。之后,它会创建一个名为的 CSV 文件stock_data.csv。然后,它使用 Python 的csv.DictWriter方法写入数据,首先使用方法写入列标题writeheader(),然后使用方法添加每行数据writerows()。

### 10. 整合所有内容

让我们将所有内容整合到一个脚本中。这个最终代码片段包括从 Yahoo Finance 抓取数据到将其导出到 CSV 文件的所有步骤。

async def scrape_data(playwright: Playwright, ticker: str) -> dict:

"""

Extracts financial data from Yahoo Finance for a given stock ticker.

Args:

playwright (Playwright): The Playwright instance used to control the browser.

ticker (str): The stock ticker symbol to retrieve data for.

Returns:

dict: A dictionary containing the extracted financial data for the given ticker.

"""

try:Launch a headless browser

browser = await playwright.chromium.launch(headless=True)

context = await browser.new_context()

page = await context.new_page()Form the URL using the ticker symbol

url = f"https://finance.yahoo.com/quote/{ticker}"Navigate to the page and wait for the DOM content to load

await page.goto(url, wait_until="domcontentloaded")Try to click the "Accept All" button for cookies, if it exists

try:

await page.locator("button.accept-all").click()

except:

passIf the button is not found, continue without any action

Dictionary to store the extracted data

data = {"Ticker": ticker}Extract regular market values

data["Regular Market Price"] = await page.locator(

'[data-testid="qsp-price"]'

).text_content()

data["Regular Market Price Change"] = await page.locator(

'[data-testid="qsp-price-change"]'

).text_content()

data["Regular Market Price Change Percent"] = await page.locator(

'[data-testid="qsp-price-change-percent"]'

).text_content()Extract market close time

market_close_time = await page.locator(

'div[slot="marketTimeNotice"] > span'

).first.text_content()

data["Market Close Time"] = market_close_time.replace("At close: ", "")Extract other financial metrics

data["Previous Close"] = await page.locator(

'[data-field="regularMarketPreviousClose"]'

).text_content()

data["Open Price"] = await page.locator(

'[data-field="regularMarketOpen"]'

).text_content()

data["Bid"] = await page.locator(

"span:has-text('Bid') + span.value"

).text_content()

data["Ask"] = await page.locator(

"span:has-text('Ask') + span.value"

).text_content()

data["Day's Range"] = await page.locator(

'[data-field="regularMarketDayRange"]'

).text_content()

data["52 Week Range"] = await page.locator(

'[data-field="fiftyTwoWeekRange"]'

).text_content()

data["Volume"] = await page.locator(

'[data-field="regularMarketVolume"]'

).text_content()

data["Avg. Volume"] = await page.locator(

'[data-field="averageVolume"]'

).text_content()

data["Market Cap"] = await page.locator(

'[data-field="marketCap"]'

).text_content()

data["Beta"] = await page.locator(

"span:has-text('Beta (5Y Monthly)') + span.value"

).text_content()

data["PE Ratio"] = await page.locator(

"span:has-text('PE Ratio (TTM)') + span.value"

).text_content()

data["EPS"] = await page.locator(

"span:has-text('EPS (TTM)') + span.value"

).text_content()

data["Earnings Date"] = await page.locator(

"span:has-text('Earnings Date') + span.value"

).text_content()

data["Dividend & Yield"] = await page.locator(

"span:has-text('Forward Dividend & Yield') + span.value"

).text_content()

data["Ex-Dividend Date"] = await page.locator(

"span:has-text('Ex-Dividend Date') + span.value"

).text_content()

data["1y Target Est"] = await page.locator(

'[data-field="targetMeanPrice"]'

).text_content()

return data

except Exception as e:Handle any exceptions and return an error message

print(f"An error occurred while processing {ticker}: {e}")

return {"Ticker": ticker, "Error": str(e)}

finally:Ensure the browser is closed even if an error occurs

await context.close()

await browser.close()async def main() -> None:

"""

Main function to run the Yahoo Finance data extraction for multiple tickers.

Reads ticker symbols from command-line arguments, extracts data for each,

and saves the results to a CSV file.

"""

if len(sys.argv) < 2:

print("Please provide at least one ticker symbol as a command-line argument.")

return

tickers = sys.argv[1:]Use async_playwright context to handle browser automation

async with async_playwright() as playwright:List to store data for all tickers

all_data = []

for ticker in tickers:Extract data for each ticker and add it to the list

data = await scrape_data(playwright, ticker)

all_data.append(data)Define the CSV file name

csv_file = "stock_data.csv"Write the extracted data to a CSV file

with open(csv_file, mode="w", newline="", encoding="utf-8") as file:

writer = csv.DictWriter(file, fieldnames=all_data[0].keys())

writer.writeheader()

writer.writerows(all_data)

print(f"Data for tickers {', '.join(tickers)

} has been saved to {csv_file}")Run the main function using asyncio

if name == "main":

asyncio.run(main())

Python复制

您可以通过提供一个或多个股票代码作为命令行参数从终端运行脚本。python yahoo_finance_scraper/main.py AAPL GOOG TSLA AMZN META

壳复制

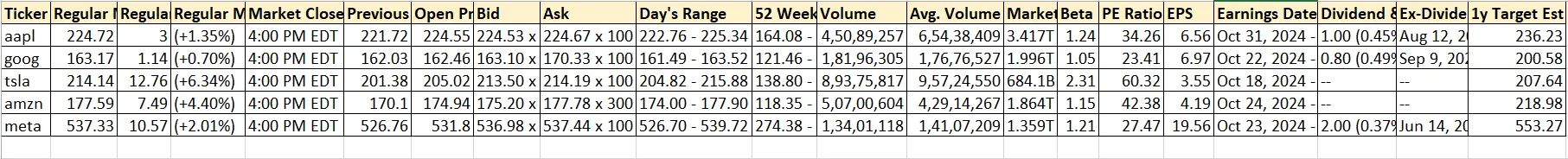

运行脚本后,stock_data.csv将在同一目录中创建名为的 CSV 文件。此文件将以有组织的方式包含所有数据。CSV 文件将如下所示:

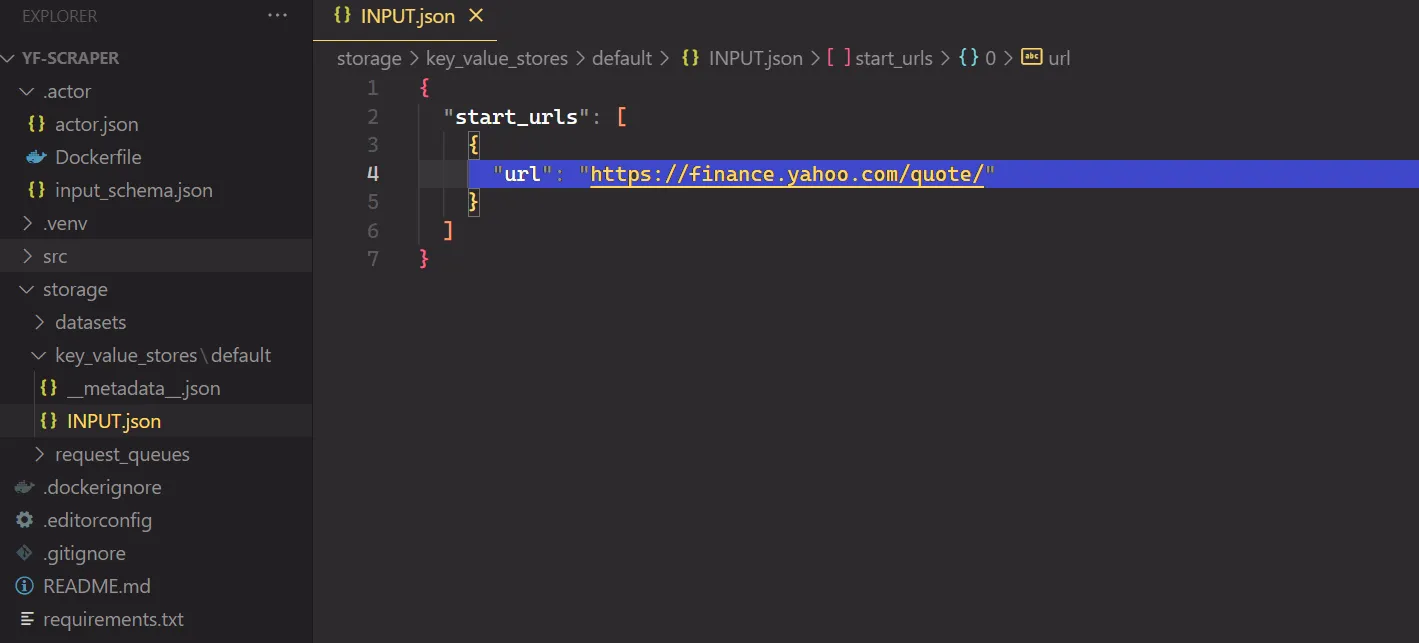

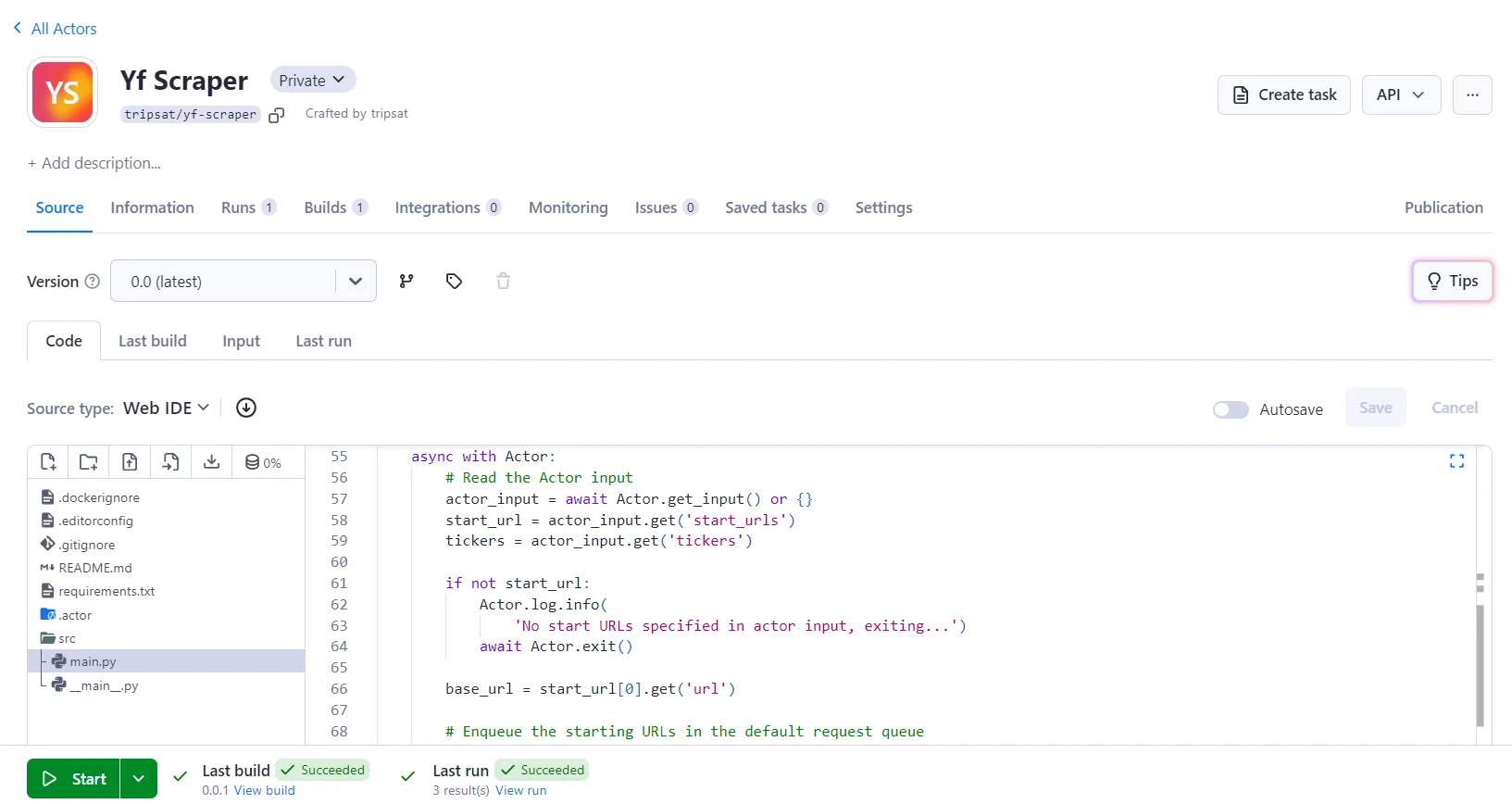

### 11.将代码部署到 Apify

准备好抓取工具后,就可以使用Apify将其部署到云端。这样您就可以按计划运行抓取工具并利用 Apify 的强大功能。对于此任务,我们将使用Python Playwright 模板进行快速设置。在 Apify 上,抓取工具称为Actors。

首先从 Apify Python 模板库克隆Playwright + Chrome模板。[](https://apify.com/templates/categories/python)

首先,您需要安装 Apify CLI,它将帮助您管理 Actor。在 macOS 或 [Linux](https://www.explinks.com/blog/ua-linux-file-operation-commands-explained/) 上,您可以使用 Homebrew 执行此操作:

brew install apify/tap/apify-cli

或者通过 NPM:npm -g install apify-cli

安装 CLI 后,使用 Python __Playwright + Chrome__模板创建一个新的 Actor:apify create yf-scraper -t python-playwright

此命令将在您的目录中设置一个项目yf-scraper。它会安装所有必要的依赖项并提供一些样板代码来帮助您入门。

导航到新项目文件夹并使用您喜欢的代码编辑器将其打开。在此示例中,我使用的是 VS Code:

cd yf-scraper

code .

该模板附带功能齐全的抓取工具。您可以通过运行命令来测试它,apify run以查看其运行情况。结果将保存在 中storage/datasets。

接下来,修改代码src/main.py以使其适合抓取雅虎财经。

修改后的代码如下:

async def extract_stock_data(page, ticker):

data = {"Ticker": ticker}

data["Regular Market Price"] = await page.locator(

'[data-testid="qsp-price"]'

).text_content()

data["Regular Market Price Change"] = await page.locator(

'[data-testid="qsp-price-change"]'

).text_content()

data["Regular Market Price Change Percent"] = await page.locator(

'[data-testid="qsp-price-change-percent"]'

).text_content()

data["Previous Close"] = await page.locator(

'[data-field="regularMarketPreviousClose"]'

).text_content()

data["Open Price"] = await page.locator(

'[data-field="regularMarketOpen"]'

).text_content()

data["Bid"] = await page.locator("span:has-text('Bid') + span.value").text_content()

data["Ask"] = await page.locator("span:has-text('Ask') + span.value").text_content()

data["Day's Range"] = await page.locator(

'[data-field="regularMarketDayRange"]'

).text_content()

data["52 Week Range"] = await page.locator(

'[data-field="fiftyTwoWeekRange"]'

).text_content()

data["Volume"] = await page.locator(

'[data-field="regularMarketVolume"]'

).text_content()

data["Avg. Volume"] = await page.locator(

'[data-field="averageVolume"]'

).text_content()

data["Market Cap"] = await page.locator('[data-field="marketCap"]').text_content()

data["Beta"] = await page.locator(

"span:has-text('Beta (5Y Monthly)') + span.value"

).text_content()

data["PE Ratio"] = await page.locator(

"span:has-text('PE Ratio (TTM)') + span.value"

).text_content()

data["EPS"] = await page.locator(

"span:has-text('EPS (TTM)') + span.value"

).text_content()

data["Earnings Date"] = await page.locator(

"span:has-text('Earnings Date') + span.value"

).text_content()

data["Dividend & Yield"] = await page.locator(

"span:has-text('Forward Dividend & Yield') + span.value"

).text_content()

data["Ex-Dividend Date"] = await page.locator(

"span:has-text('Ex-Dividend Date') + span.value"

).text_content()

data["1y Target Est"] = await page.locator(

'[data-field="targetMeanPrice"]'

).text_content()

return dataasync def main() -> None:

"""

Main function to run the Apify Actor and extract stock data using Playwright.

Reads input configuration from the Actor, enqueues URLs for scraping,

launches Playwright to process requests, and extracts stock data.

"""

async with Actor:Retrieve input parameters

actor_input = await Actor.get_input() or {}

start_urls = actor_input.get("start_urls", [])

tickers = actor_input.get("tickers", [])

if not start_urls:

Actor.log.info(

"No start URLs specified in actor input. Exiting...")

await Actor.exit()

base_url = start_urls[0].get("url", "")Enqueue requests for each ticker

default_queue = await Actor.open_request_queue()

for ticker in tickers:

url = f"{base_url}{ticker}"

await default_queue.add_request({"url": url, "userData": {"depth": 0}})Launch Playwright and open a new browser context

Actor.log.info("Launching Playwright...")

async with async_playwright() as playwright:

browser = await playwright.chromium.launch(headless=Actor.config.headless)

context = await browser.new_context()Process requests from the queue

while request := await default_queue.fetch_next_request():

url = request["url"]

Actor.log.info(f"Scraping {url} ...")

try:Open the URL in a new Playwright page

page = await context.new_page()

await page.goto(url, wait_until="domcontentloaded")Extract the ticker symbol from the URL

ticker = url.rsplit("/", 1)[-1]

data = await extract_stock_data(page, ticker)Push the extracted data to Apify

await Actor.push_data(data)

except Exception as e:

Actor.log.exception(

f"Error extracting data from {url}: {e}")

finally:Ensure the page is closed and the request is marked as handled

await page.close()

await default_queue.mark_request_as_handled(request)在运行代码之前,更新目录input_[schema](https://www.explinks.com/blog/ua-what-is-schema-in-database/).json中的文件.actor/以包含 Yahoo Finance 报价页面 URL 并添加一个tickers字段。

这是更新后的input_schema.json文件:

{

"title": "Python Playwright Scraper",

"type": "object",

"schemaVersion": 1,

"properties": {

"start_urls": {

"title": "Start URLs",

"type": "array",

"description": "URLs to start with",

"prefill": [

{

"url": "https://finance.yahoo.com/quote/"

}

],

"editor": "requestListSources"

},

"tickers": {

"title": "Tickers",

"type": "array",

"description": "List of stock ticker symbols to scrape data for",

"items": {

"type": "string"

},

"prefill": [

"AAPL",

"GOOGL",

"AMZN"

],

"editor": "stringList"

},

"max_depth": {

"title": "Maximum depth",

"type": "integer",

"description": "Depth to which to scrape to",

"default": 1

}

},

"required": [

"start_urls",

"tickers"

]

}

JSON复制

此外,input.json通过将 URL 更改为 Yahoo Finance 页面来更新文件,以防止执行期间发生冲突,或者您可以直接删除此文件。

要运行你的 Actor,请在终端中运行以下命令:

apify run

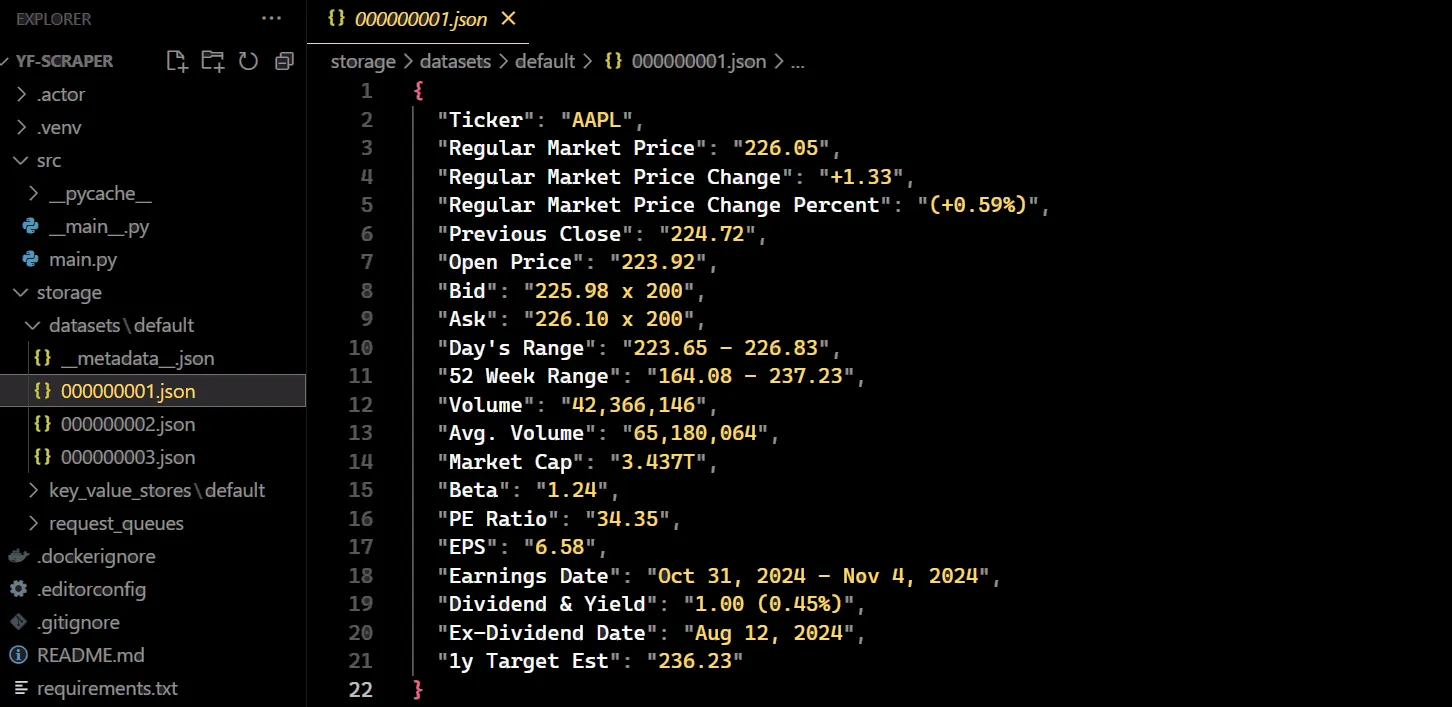

抓取的结果将保存在 中storage/datasets,其中每个股票代码都有自己的 JSON 文件,如下所示:

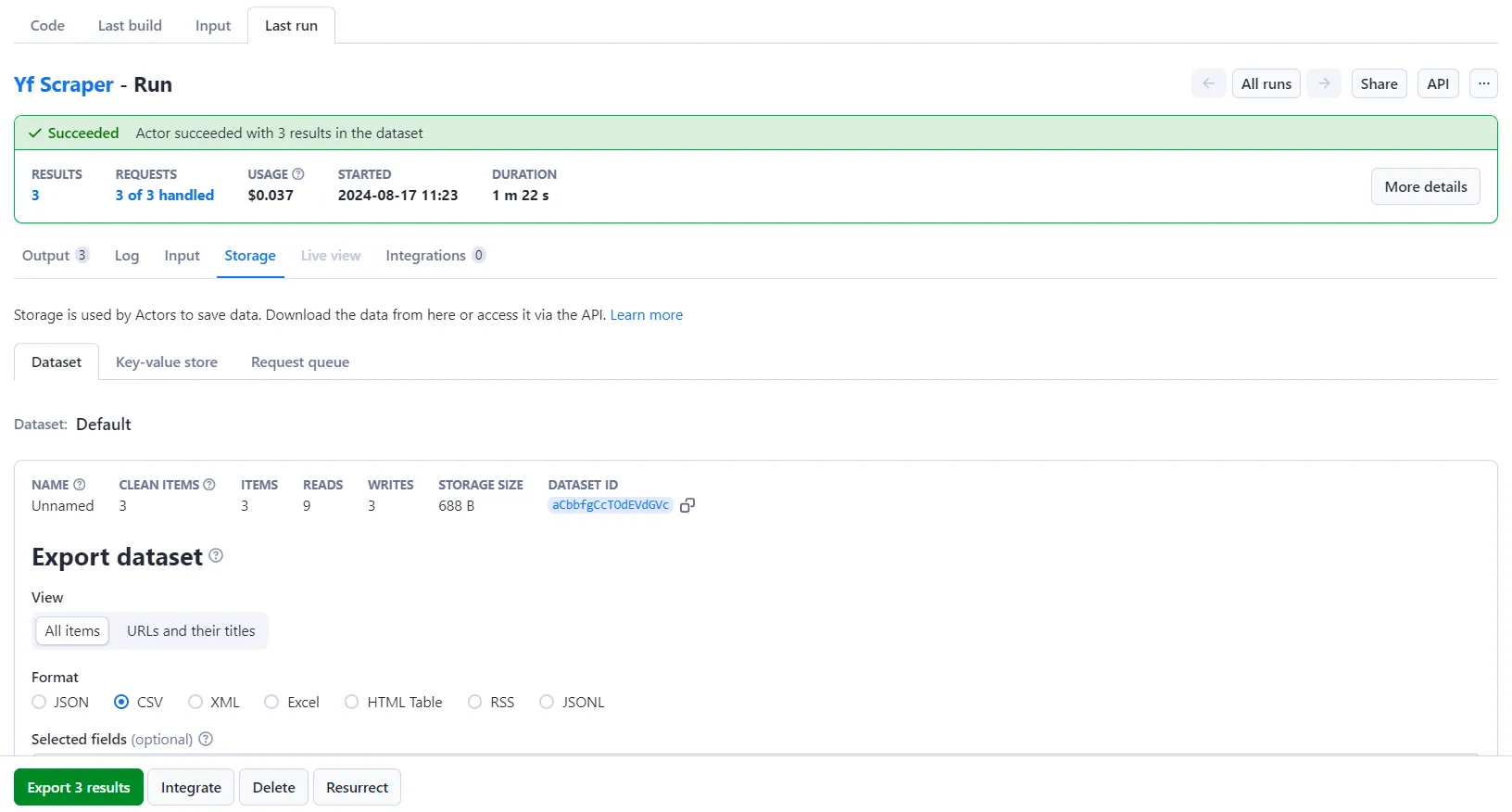

要部署您的 Actor,请先创建一个 Apify 帐户(如果您还没有)。然后,从 Apify 控制台的“设置 → 集成”下获取您的 API 令牌,最后使用以下命令使用您的令牌登录:

apify login -t YOUR_APIFY_TOKEN

最后,将您的 Actor 推送到 Apify:apify push

片刻之后,你的 Actor 应该会出现在 Apify 控制台的Actors → My actors下。

您的抓取工具现已准备好在 Apify 平台上运行。点击“__开始__”按钮即可开始。运行完成后,您可以从“__存储__”选项卡预览和下载各种格式的数据。

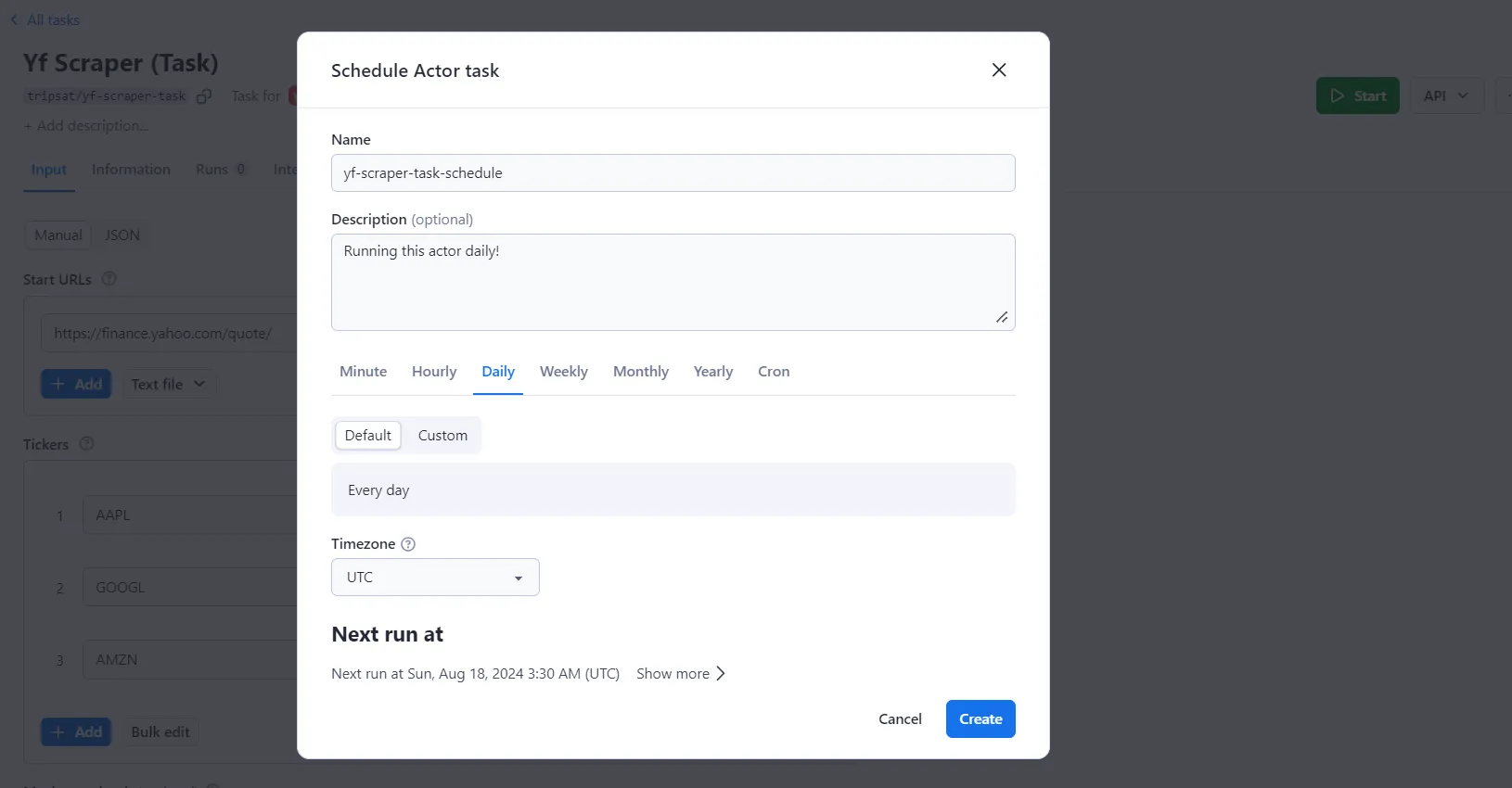

__额外好处:__在 Apify 上运行抓取工具的一个主要优势是可以为同一个 Actor 保存不同的配置并设置自动调度。让我们为我们的 Playwright Actor 设置这个。

在Actor页面上,点击 __创建空任务。__

接下来,单击 __“操作”__ ,然后 单击__“计划”__。

最后,选择你希望 Actor 运行的频率并点击 __“创建”__。

要开始在 Apify 平台上使用 Python 进行抓取,您可以使用Python 代码模板。这些模板适用于流行的库,例如 Requests、Beautiful Soup、Scrapy、Playwright 和 Selenium。使用这些模板,您可以快速构建用于各种 Web 抓取任务的抓取工具。

使用代码模板快速构建抓取工具

## 雅虎财经有 API 吗?

[Yahoo Finance](https://www.explinks.com/api/fintech_yahoo_finance_public_data) 提供免费 API,让用户可以访问大量财务信息。其中包括实时股票报价、历史市场数据和最新财经新闻。该 API 提供各种端点,允许您以 JSON、CSV 和 XML 等不同格式检索信息。您可以轻松地将数据集成到您的项目中,以最适合您需求的方式使用它。

## 雅虎财经开始营业

您已经构建了一个实用的系统,使用 Playwright 从 Yahoo Finance 中提取财务数据。此代码处理多个股票代码并将结果保存到 CSV 文件中。您已经学会了如何绕过拦截机制,让您的抓取工具保持正常运行。

原文链接:最新文章

- 从零开始的机器学习实践指南

- 解析2024年Gartner® API保护市场指南

- Go工程化(五) API 设计下: 基于 protobuf 自动生成 gin 代码

- 如何在Java、Python、PHP中使用会员短信API?

- 探索物种世界:中科院生物百科API的强大功能

- 如何在 Facebook Developers 上设置 WhatsApp Cloud API

- 解决REST API常见问题:问题清单及解答一览

- OpenAI的API有哪些功能?

- 为开源项目 go-gin-api 增加 WebSocket 模块

- Python调用股票API获取实时数据

- API请求 – 什么是API请求?

- 给初学者的RESTful API 安全设计指南!